Having concern about an insider threat doesn’t mean there is one, and not having concern doesn’t mean there isn’t. The question, like any question about risk prior to taking informed action, is “how much of a threat?”

Averaged over five years, 26% of all breaches happen because of activity internal to the environment. These internal breaches come from either human error or malicious use by employees or contractors.

When the numbers are collected and reviewed, the villainous insider is far less a threat than the media and security tool dealers would have everyone believe.

What Level of Cyber Threat are Insiders?

The disgruntled employee threat is real but often overblown. Published findings analyzing data breaches and their threat actors (external or internal) are not rare, but they are often clouded by ulterior motives (selling a product) or by poor analysis and data collection methodologies.

The chart below is compiled from five years of reports published by Verizon in concert with the Secret Service (this report is the industry gold standard). It shows the percentage of breaches with an internal threat actor – where the internal threat actor is either a form of misuse or error.

| Industry* | 2021 | 2020 | 2019 | 2018 | 2017 |
|---|---|---|---|---|---|
| Accommodation and Food Services | 10% | 22% | 5% | 1% | 4% |
| Arts, Entertainment & Recreation | 31% | 33% | 0% | 0% | 0% |
| Education | 20% | 33% | 45% | 19% | 30% |
| Financial and Insurance | 44% | 35% | 36% | 7% | 6% |
| Healthcare | 39% | 48% | 42% | 56% | 68% |
| Information | 37% | 34% | 44% | 23% | 3% |
| Manufacturing | 19% | 25% | 30% | 13% | 7% |
| Mining, Quarrying, Oil | 2% | 28% | 0% | 0% | 0% |
| Professional, Scientific and Technical Services | 26% | 22% | 21% | 31% | 0% |
| Public Admin | 17% | 43% | 30% | 34% | 40% |
| Retail | 17% | 27% | 19% | 7% | 7% |
| Average | 24% | 32% | 30% | 21% | 21% |
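
For readers who want to check the arithmetic, the Average row and the 26% five-year figure can be reproduced from the chart. The sketch below is one way to do it, not necessarily how the original numbers were produced. It assumes that a 0% cell means no data was reported for that industry and year, so those cells are left out of the mean. That assumption is not stated anywhere in this article, but it is the reading that makes the computed values match the Average row.

```python
from statistics import mean

YEARS = [2021, 2020, 2019, 2018, 2017]

# Percent of breaches with an internal threat actor, copied from the chart.
INTERNAL_PCT = {
    "Accommodation and Food Services": [10, 22, 5, 1, 4],
    "Arts, Entertainment & Recreation": [31, 33, 0, 0, 0],
    "Education": [20, 33, 45, 19, 30],
    "Financial and Insurance": [44, 35, 36, 7, 6],
    "Healthcare": [39, 48, 42, 56, 68],
    "Information": [37, 34, 44, 23, 3],
    "Manufacturing": [19, 25, 30, 13, 7],
    "Mining, Quarrying, Oil": [2, 28, 0, 0, 0],
    "Professional, Scientific and Technical Services": [26, 22, 21, 31, 0],
    "Public Admin": [17, 43, 30, 34, 40],
    "Retail": [17, 27, 19, 7, 7],
}


def yearly_average(column):
    """Rounded mean of one year's column, skipping 0% cells.

    Assumption: 0% means "no data reported", not "zero internal breaches".
    """
    reported = [value for value in column if value > 0]
    return round(mean(reported))


# Transpose the rows so each tuple holds one year's values across industries.
columns = list(zip(*INTERNAL_PCT.values()))
averages = {year: yearly_average(col) for year, col in zip(YEARS, columns)}

# Five-year figure: the mean of the five rounded yearly averages.
five_year_average = round(mean(list(averages.values())))

print(averages)
print(five_year_average)
```

If the 0% cells are instead counted as real zeros, the 2017 through 2019 averages come out lower than the chart shows, which is why the missing-data reading is used here.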

The aggregated average of the error type for 2021 was 17% (down from 2020’s 22% and not shown on the above chart). Errors are non-malicious, accidental alterations in access leading to a breach.

A couple of examples of errors leading to a breach (unintended exposure of data) include:

- Remoted into a desktop, disabled the firewall to make a change, and failed to re-enable the firewall.

- Inadvertently placed medical transcription files on an FTP site that was configured in a way that allowed access to the transcription documents from the Web.

(Note: The error type of internal threat actor in this data does not include employees getting phished.)

This leaves about 7% (2021’s 24% average minus the 17% from error) as the aggregated average for the misuse type. The misuse type is the intentional misuse of access for an unauthorized reason (monetary, revenge, etc.). This does not mean that 7% of every organization’s employee population is a malicious insider. It means that, of all the breaches Verizon analyzed in 2021, only 7% were from internal, intentional misuse.

The misuse type is often the type warned about in marketing material and news broadcasts. Stories from revenge to espionage titillate the buyer and viewer. “Watch out for the unscrupulous employee ready to sell data.”

The reality, it would seem, is less James Bond and more Three Stooges.

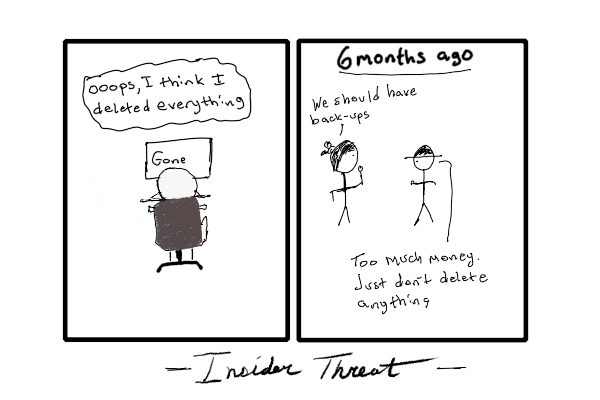

Employee error is the real insider threat

So, the internal chest-burster waiting for the opportune moment to explode forth and destroy the host, though not a myth, is rare. Edward Snowden is not the norm; he’s an outlier.

Whether or not a business decides it needs to have a plan for the outlier is much like deciding if Kansas should have an earthquake emergency plan – not a terrible idea but there may be more pressing threats to plan for first.

How to Reduce the Risk of an Insider Threat?

It would appear that the vast majority of the so-called insider threat is a result of employees making honest mistakes. Of course, an honest mistake does not bring back the stolen data or remove the ransomware. If your organization is going to do anything about the “insider problem” then it first needs to understand what problem it is trying to solve – error, malice, or both.

Since there is as yet no pill, process, or policy that eliminates error or malice, the best solution one can hope for is a reasonable reduction of both the cause (error/malice) and the effect (impact of breach/data exposure).

The existence of a large variety of complex IT environments and processes means a variety of solutions will exist. These solutions will constitute part of the business’s overall cybersecurity strategy, but should meet these requirements:

- Security policies that are easy to understand, implement, and enforce

  - If the typical end-user won’t understand the policy, they won’t follow it.

  - If the policy is overly complex, incorporating every conceivable security measure, it gets ignored.

  - If a policy violation has no consequences, people will stop caring.

- Security policies that enhance security without frustrating employees

  - It’s easy to enhance security (follow these 25 steps to safely share a file), but if it frustrates employees, they will find ways around it.

- Technical controls that reduce the cause and effect of the insider problem without reducing productivity

  - Automation: the more manual involvement in a process, the greater the chance of introducing an undetectable error. That is, undetectable until it turns into a detectable breach.

  - Efficiency: find and eliminate any duplication of effort. Parallel processes increase the chance of error.

  - Access: Don’t give anyone any more access than they need to do the job. Sometimes that means granting temporary access that gets removed automatically (remember: reduce human involvement). And make sure to remove access as soon as an employee leaves the organization.
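
To make the access point concrete, here is a minimal sketch of temporary access that expires on its own, plus a one-call offboarding step. Everything in it is hypothetical and for illustration only: the `AccessRegistry` class, its method names, and the user and resource names are not from any real product, and a real deployment would rely on the organization’s identity and access management system rather than an in-memory list.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class Grant:
    user: str
    resource: str
    expires_at: Optional[datetime]  # None means standing access with no expiry


class AccessRegistry:
    """Hypothetical in-memory registry of who may access what."""

    def __init__(self):
        self._grants = []

    def grant(self, user, resource, ttl=None, now=None):
        """Record a grant; with a ttl, the grant lapses without anyone revoking it."""
        now = now or datetime.now(timezone.utc)
        expires_at = now + ttl if ttl is not None else None
        self._grants.append(Grant(user, resource, expires_at))

    def is_allowed(self, user, resource, now=None):
        """Expiry is checked at lookup time, so no manual cleanup is required."""
        now = now or datetime.now(timezone.utc)
        return any(
            g.user == user
            and g.resource == resource
            and (g.expires_at is None or now < g.expires_at)
            for g in self._grants
        )

    def offboard(self, user):
        """Remove every grant for a departing employee; returns how many were removed."""
        before = len(self._grants)
        self._grants = [g for g in self._grants if g.user != user]
        return before - len(self._grants)


# Example: four hours of access to a sensitive system, then offboarding.
start = datetime(2024, 1, 1, 9, 0, tzinfo=timezone.utc)
registry = AccessRegistry()
registry.grant("alice", "payroll-db", ttl=timedelta(hours=4), now=start)
registry.grant("alice", "wiki")  # standing access

early = registry.is_allowed("alice", "payroll-db", now=start + timedelta(hours=1))
late = registry.is_allowed("alice", "payroll-db", now=start + timedelta(hours=5))
removed = registry.offboard("alice")
still_has_wiki = registry.is_allowed("alice", "wiki")

print(early, late, removed, still_has_wiki)
```

The design choice worth noting is that the expiry is enforced when access is checked, not by a person remembering to revoke it, which is the “reduce human involvement” idea applied to access itself.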

There is probably something to a welcoming work environment and reasonable pay to help reduce the risk of the intentional misuse insider – the one all the insider threat programs are going on about – but I’ll leave that to other articles on the subject of “employee appreciation”. With that said, employee appreciation (i.e., a good culture) does qualify as a legitimate cybersecurity control.

Don’t let the insider threat hype suck you into spending money on expensive behavioral monitoring solutions that only shave a hair off your overall risk. Do take the time to understand what kinds of things could go wrong through error and misuse, and implement reasonable policies, processes, and technology to reduce the overall risk.

*Data is pulled from the Verizon breach reports going back to 2017, though my own analysis and “number crunching” were conducted in some cases.